Recently, I exchanged some e-mails with Anne van Kesteren about security models for the Web. He wrote his thoughts down in an interesting post on his blog titled Web Computing. This is sort of a reply to that post with my own thoughts.

Today, 10,000 days after it was published, the first web site is still working (congratulations! ^^). 10,000 days ago, JavaScript did not even exist, but now we are about to welcome ES6 and the Web has more than 12,000 APIs.

Latecomers such as the Service Workers, Push and Sync APIs have revitalized web sites so they can compete with their native counterparts, but if we want to leverage the true potential of the Web, we need more powerful APIs that put it on the same level as native platforms. Powerful APIs, however, imply security risks.

To encourage the proposal and implementation of these new APIs, an effective, non-exploitable revocation scheme is needed.

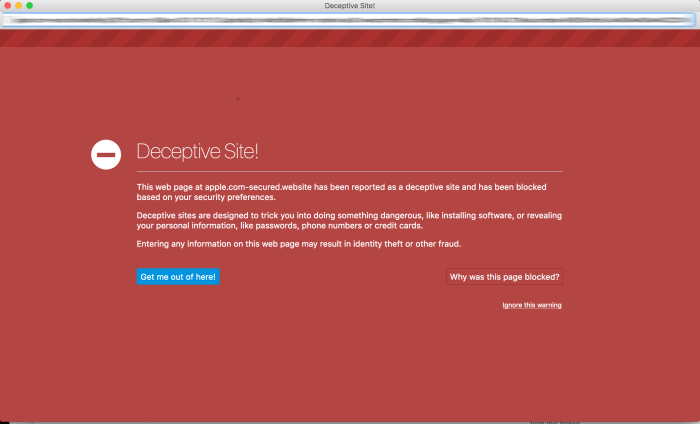

In the same way we can detect and block certain deceptive sites, we could extend this idea to code analysis. Relying on the same arguments supporting the success of open source communities, perhaps a decentralized network of security reviews and reviewers is doable.

This decentralized database (probably persisted by user agents) would declare the safeness of a web property based on the presumption of innocence. In rough statistical terms, the null hypothesis is "the web property is not evil", and we must find strong evidence to reject it in favour of the alternative hypothesis, "the web property is evil".

Regardless of the methodology chosen, there will be two main categories: either we do not have enough evidence to declare a web property harmful (or we are completely sure it is safe), or we have strong evidence that it is harmful. We should take some action in the latter case and not alert the user in the former, but what should we actually do? Not sure yet, honestly.
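To make the statistical framing a bit more concrete, here is a minimal sketch of one possible test. It is only an illustration, not a proposed methodology: it assumes that reviews are independent, that an honest site still gets flagged by mistake at a known rate (`false_alarm_rate`), and that a one-sided binomial test is appropriate. The function names and the default thresholds are made up for the example.

```python
from math import comb


def p_value(flagged, reviews, false_alarm_rate):
    """P(X >= flagged) for X ~ Binomial(reviews, false_alarm_rate).

    Null hypothesis: the web property is not evil, so each independent
    review flags it only by mistake, with probability false_alarm_rate.
    """
    return sum(
        comb(reviews, k)
        * false_alarm_rate ** k
        * (1 - false_alarm_rate) ** (reviews - k)
        for k in range(flagged, reviews + 1)
    )


def verdict(flagged, reviews, false_alarm_rate=0.05, alpha=0.01):
    """Presumption of innocence: 'harmful' only if the null is rejected."""
    # Assumed thresholds; a real scheme would have to justify them.
    if p_value(flagged, reviews, false_alarm_rate) < alpha:
        return "harmful"
    return "not enough evidence"
```

With these assumed numbers, a single flag out of 20 reviews is easily explained by chance, while six flags out of 20 would be enough to reject the null hypothesis.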

For instance, in case of strong evidence, should the user agent prevent the user from accessing the web site? Or should it automatically revoke some permissions and query the user again? Could the user decide to ignore the warnings?

There are more gaps to fill, starting with a formal definition of harmful, i.e., what does harmful mean in our context? Should a deceptive site be considered harmful from this proposal's point of view? Consider a phishing site that fakes the Gmail login page with a couple of input tags and a simple POST form, with no JavaScript at all... In my opinion, we should not focus on a site's honesty but on API abuse. We already have mechanisms for warning about deception, and if you want to judge a web site's reputation, consider alternatives such as My Web Of Trust.

In his response, Anne highlighted the importance of not falling into the CA trap "because that means it's again the browsers that delegate trust to third parties and hope those third parties are not evil".

OpenPGP has the concept of a ring (or web) of trust as a decentralized way to grant trustworthiness. What if, instead of granting trustworthiness, UAs provided a similar structure to revoke it? A kind of mistrust certificate.
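As a rough sketch of what such a structure might look like, the snippet below models a mistrust statement as a plain record and revokes an API for an origin only when enough distinct reviewers the user already trusts have issued one. Everything here is hypothetical: the `MistrustStatement` fields, the `revoked_apis` helper and the threshold are assumptions for illustration, and verifying the signatures that would make these real certificates is deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MistrustStatement:
    issuer: str  # hypothetical reviewer identity, e.g. a key fingerprint
    origin: str  # the web property being mistrusted
    api: str     # the powerful API whose use is questioned
    reason: str  # human-readable justification


def revoked_apis(statements, origin, trusted_issuers, threshold=2):
    """Return the APIs mistrusted for origin by >= threshold trusted issuers."""
    issuers_by_api = {}
    for s in statements:
        # Ignore statements about other origins or from unknown issuers.
        if s.origin == origin and s.issuer in trusted_issuers:
            issuers_by_api.setdefault(s.api, set()).add(s.issuer)
    return {
        api
        for api, issuers in issuers_by_api.items()
        if len(issuers) >= threshold
    }
```

Counting distinct issuers rather than statements means a single reviewer cannot revoke a permission alone by repeating the same complaint.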

And finally, there is the inevitable problem of auditing a web site. The browser could perform some static analysis and provide a first, per-spec judgement, but what would happen afterwards? Can we provide some kind of platform to effectively allow decentralized reviews by users?

In my ideal world, I imagine a community of web experts providing evidence of API abuse: a reviewer selects a fragment of code and explains why that snippet constitutes an instance of misuse or can be considered harmful, and other users can see and validate the evidence. Providing this evidence would be a source of credit and recognition to the same extent as contributing to Open Source projects.

Another bunch of uncomfortable questions arises here, though. What if the code is obfuscated or simply minified? How does the browser track web site versions? Should the opinions of reviewers be weighted? By which criteria?
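I do not have answers, but a naive starting point helps to see where the difficulties are. The sketch below assumes a version is identified by the SHA-256 digest of the script source and that each reviewer carries a numeric weight assigned by some criterion still to be decided. Both `version_id` and `weighted_score` are hypothetical helpers, not part of any existing API.

```python
import hashlib


def version_id(script_source):
    """Identify a version of a script by the SHA-256 digest of its source."""
    return hashlib.sha256(script_source.encode("utf-8")).hexdigest()


def weighted_score(opinions):
    """Share of reviewer weight (0.0 to 1.0) that judged the code harmful.

    opinions is a list of (weight, harmful) pairs; how weights are
    earned is exactly the open question and is not modelled here.
    """
    total = sum(weight for weight, _ in opinions)
    if total == 0:
        return 0.0
    return sum(weight for weight, harmful in opinions if harmful) / total
```

Note how fragile the hash is: minifying the code, or changing a single character, yields a different identifier, so reviews attached to the previous version would no longer apply.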

Well, that's the kind of debate I want to start. Thoughts?